Student Projects

VE/VM450

Control of Virtual and Real Manufacturing Systems via VR/AR Techniques

Sponsor: Prof. Mian Li

Team Members: Zhiqi Chen, Tianyi Ge, Yue Wu, Yue Zhang, Junwei Deng

Instructor: Prof. Mian Li


Project Description

Problem Statement

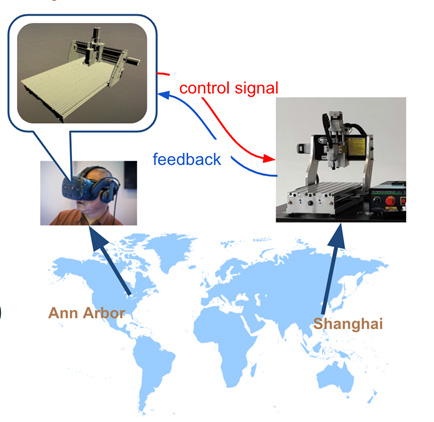

How can engineers access machines remotely during a pandemic such as COVID-19? How can students learn to operate machines online? This project builds a Digital Twin of a CNC milling machine that uses virtual reality/augmented reality (VR/AR) technology to show the manufacturing process synchronously and to simulate the machining of a workpiece.

Fig. 1 Real-Time Remote-Control CNC Manufacturing System

Concept Generation

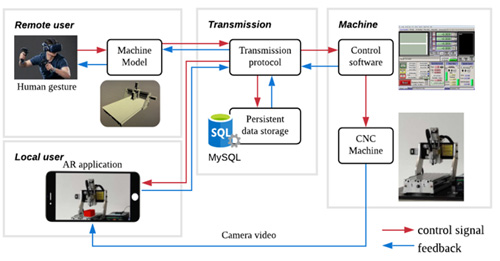

The system has three main parts: the user, the transmission, and the machine. Remote users control the machine with gestures and observe it through a VR headset, while local users can view a virtual workpiece rendered in a mobile app. The red arrow represents control signals input by the user, and the blue arrows represent real-time feedback from the machine. To keep the two ends synchronized, a robust transmission mechanism is needed.

Fig. 2 Concept Diagram
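To make the red/blue signal flow concrete, here is a minimal sketch, not the team's implementation, of how control and feedback messages might be modeled. The message classes, their fields, and the sequence-number rule (the virtual side ignores feedback older than what it has already applied) are assumptions chosen to illustrate one way of keeping the two ends consistent when messages arrive late or twice.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class ControlMessage:
    """User -> machine (red arrow). Hypothetical fields."""
    seq: int
    command: str  # e.g. a G-code line such as "G1 X10 Y5"


@dataclass
class FeedbackMessage:
    """Machine -> user (blue arrows). Hypothetical status snapshot."""
    seq: int
    x: float
    y: float
    z: float


_KINDS = {"ControlMessage": ControlMessage, "FeedbackMessage": FeedbackMessage}


def encode(msg) -> bytes:
    """Serialize a message to JSON, tagging it with its type name."""
    return json.dumps({"kind": type(msg).__name__, **asdict(msg)}).encode("utf-8")


def decode(data: bytes):
    """Rebuild the message object from its JSON encoding."""
    obj = json.loads(data.decode("utf-8"))
    cls = _KINDS[obj.pop("kind")]
    return cls(**obj)


class TwinState:
    """Virtual-side state that only applies feedback newer than the last one seen."""

    def __init__(self) -> None:
        self.last_seq = -1
        self.position = (0.0, 0.0, 0.0)

    def apply(self, fb: FeedbackMessage) -> bool:
        if fb.seq <= self.last_seq:
            return False  # stale or duplicate feedback: keep the current state
        self.last_seq = fb.seq
        self.position = (fb.x, fb.y, fb.z)
        return True
```

Dropping out-of-order feedback trades completeness for consistency: the virtual machine may skip an intermediate state, but it never moves backwards in time.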

Design Description

On the user side, the design uses an HTC Vive Pro as the VR terminal and a smartphone app as the AR terminal. The virtual milling machine and artifact are built in Unity 3D. Users send control operations by interacting with the VR/AR interface; these operation signals are transferred over a socket connection and stored in an online MySQL database. On the machine side, we use Mach3 to control the CNC milling machine and execute the users' operations. The milling machine's status is sent back to the user terminals, where the virtual machine reproduces it in real time.
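The operation path (socket transfer, then storage) can be sketched as follows. This is an illustration under stated assumptions: Python stands in for the Unity client, `sqlite3` stands in for the online MySQL database, and the 4-byte length-prefix framing, the `operations` table, and its `command` column are hypothetical. Length-prefixing matters because a stream socket does not preserve message boundaries.

```python
import json
import socket
import sqlite3
import struct


def send_frame(sock: socket.socket, payload: dict) -> None:
    """Send one JSON message prefixed with its 4-byte big-endian length."""
    body = json.dumps(payload).encode("utf-8")
    sock.sendall(struct.pack("!I", len(body)) + body)


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes; a stream may deliver one message in several pieces."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("socket closed mid-frame")
        buf += chunk
    return buf


def recv_frame(sock: socket.socket) -> dict:
    """Read the length header, then exactly that many bytes of JSON."""
    (length,) = struct.unpack("!I", recv_exact(sock, 4))
    return json.loads(recv_exact(sock, length).decode("utf-8"))


def store_operation(db: sqlite3.Connection, op: dict) -> None:
    """Persist a received operation (sqlite3 stands in for MySQL here)."""
    db.execute("INSERT INTO operations (command) VALUES (?)", (op["command"],))
    db.commit()


if __name__ == "__main__":
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE operations (id INTEGER PRIMARY KEY, command TEXT)")
    client, server = socket.socketpair()  # both ends in one process for the demo
    send_frame(client, {"command": "G1 X10 Y5 F300"})
    store_operation(db, recv_frame(server))
    print(db.execute("SELECT command FROM operations").fetchall())
    client.close()
    server.close()
```

In a deployment the two ends would run on different hosts and connect with `socket.create_connection`; `socket.socketpair()` is used only so the example runs in a single process.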

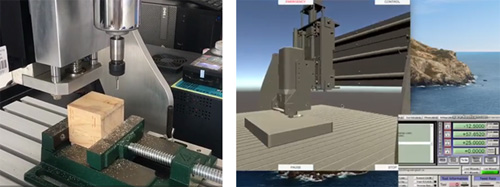

Fig. 4 The complete VR system setup

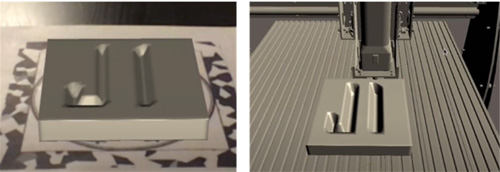

Fig. 5 AR & VR effects for the JI G-code

AR Modeling

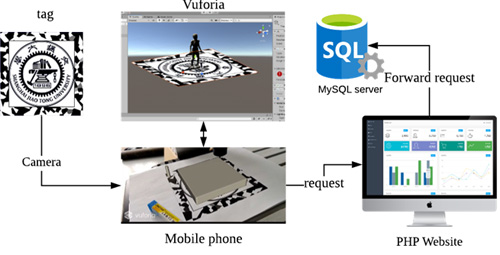

We use the Vuforia Engine with a custom-designed tag for detection and localization. Unlike the VR client, which connects to the SQL database directly, the mobile app retrieves data through a static website that exposes a PHP query API.

Fig. 6 AR Model Diagram
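The AR retrieval path can be sketched in the same spirit. The real endpoint is written in PHP and the client runs inside the Unity app; in this hypothetical Python stand-in, `http.server` plays the role of the PHP query API, the `/status` path and the JSON fields are invented for illustration, and `fetch_status` shows the request the mobile client would repeat on each poll.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical latest machine status, standing in for the newest database row.
LATEST_STATUS = {"x": 12.5, "y": 3.0, "z": -1.2, "spindle_on": True}


class StatusHandler(BaseHTTPRequestHandler):
    """Stand-in for the PHP query endpoint: GET /status returns JSON."""

    def do_GET(self):
        if self.path != "/status":
            self.send_error(404)
            return
        body = json.dumps(LATEST_STATUS).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging in the demo


def start_server() -> HTTPServer:
    """Serve on a free local port (port 0) in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), StatusHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch_status(base_url: str) -> dict:
    """One poll by the AR client: GET the endpoint and parse the JSON body."""
    with urllib.request.urlopen(base_url + "/status", timeout=2) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

An HTTP query layer like this lets the phone avoid holding database credentials or a direct database connection, at the cost of one request per update.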

Validation

Validation Process:

For update frequency and latency, which are positively correlated, we sample the update frequency by tuning this parameter in the MySQL settings and record the resulting latency with Wireshark's RTT tool. We expect the sampled points to lie in the region where latency <= 200 ms and frequency >= 20 Hz.
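The acceptance test for each (frequency, latency) sample reduces to a region check. A minimal sketch; the `Sample` type and helper names are hypothetical, and the thresholds are the 20 Hz and 200 ms targets stated above.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Sample:
    frequency_hz: float  # update frequency configured for the run
    latency_ms: float    # round-trip time measured for that run


def within_spec(s: Sample, min_freq_hz: float = 20.0, max_latency_ms: float = 200.0) -> bool:
    """True if the sample lies in the target region of the frequency/latency plane."""
    return s.frequency_hz >= min_freq_hz and s.latency_ms <= max_latency_ms


def feasible_frequencies(samples: Iterable[Sample]) -> List[float]:
    """Frequencies whose measured latency still meets the specification."""
    return [s.frequency_hz for s in samples if within_spec(s)]


def update_period_ms(frequency_hz: float) -> float:
    """Time between consecutive updates at a given frequency."""
    return 1000.0 / frequency_hz
```

As a worked number, 20 Hz corresponds to one update every 1000 / 20 = 50 ms, so the 200 ms latency budget spans four update periods.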

For the deviation rate, we rasterize both the real object and the simulated surface; the Euclidean distance between the real and virtual trajectories should then stay below a threshold of 7%. The specifications and their current status are listed below; all have been verified except the object deviation rate.

√ Update frequency >= 20 Hz

√ Average latency <= 200 ms

• Object deviation rate <= 7%

√ Field of view >= 200

√ Financial cost <= 7,000

√ Database latency <= 30 ms

√ Number of supported operating systems >= 2

√ means verified and • means to be determined.
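One way to turn the deviation-rate criterion into a number is sketched below. The source does not state how distances are normalized into a percentage, so dividing the mean point-to-point Euclidean distance by the diagonal of the real trajectory's bounding box is an assumption, as is the premise that both rasterized trajectories are already sampled at corresponding points.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def deviation_rate(real: List[Point], virtual: List[Point]) -> float:
    """Mean Euclidean distance between corresponding points, normalized by the
    bounding-box diagonal of the real trajectory (assumed normalization)."""
    if not real or len(real) != len(virtual):
        raise ValueError("trajectories must be non-empty and sampled at the same points")
    mean_dist = sum(math.dist(p, q) for p, q in zip(real, virtual)) / len(real)
    xs, ys, zs = zip(*real)
    diagonal = math.dist((min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs)))
    if diagonal == 0:
        raise ValueError("real trajectory has zero extent")
    return mean_dist / diagonal


def meets_threshold(rate: float, threshold: float = 0.07) -> bool:
    """Check the rate against the 7% specification."""
    return rate <= threshold
```

Normalizing by part size makes the percentage comparable across workpieces of different dimensions; a different reference length would change the numbers, so it should be fixed before the criterion is verified.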

Conclusion

We successfully integrated a system comprising a data reading mechanism, a 3D Unity model, an artifact articulation shader, a stable database, a real-time transmission protocol, a Vive VR environment, and an iOS/Android AR application. The product demonstrates the effectiveness of the Digital Twin approach and its potential for promoting smart factories and virtual learning.

Acknowledgement

Sponsor: Prof. Mian Li

Special thanks to: Zongyang Hu, Chenzhi Zhang, Tianyu Wang from UM-SJTU Joint Institute
