Student Projects

VE/VM450

Feature Point Detection of Calibration Boards for ADAS Camera Calibration

Sponsor: Huajun Guan, Jianning Yu, HASCO Visions

Team Members: Yinwei Dai, Yitong Jiang, Xuhua Sun, Jiaxing Yang, Tianchi Zhang

Instructor: Prof. Jigang Wu

Project Description

Problem Statement

Advanced driver-assistance systems (ADAS) play an increasingly important role in driving experience and safety. To support the distance estimation function of ADAS, our project implements a corner point detection algorithm for calibration boards, which is the first step of camera extrinsic parameter calibration.

![Fig. 1 Camera calibration for the surrounding view system [1]](https://www.ji.sjtu.edu.cn/wp-content/uploads/2020/07/450-8-pic01.jpg)

Fig. 1 Camera calibration for the surrounding view system [1]

Concept Generation

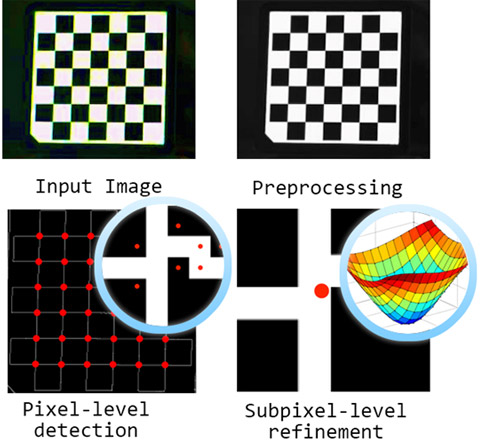

We divided our algorithm into three sub-functions. The first preprocesses the image, using methods chosen according to the needs of the later steps. The second applies a pixel-level detection algorithm that locates each corner point to pixel accuracy. The third applies a subpixel-level refinement. Figure 2 is a flow chart of this split.

Fig. 2 Concept Diagram

Design Description

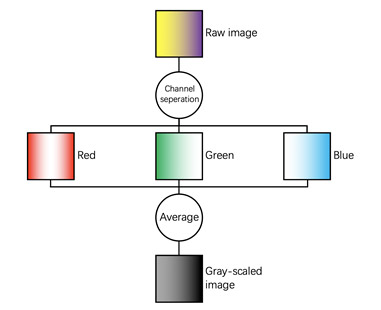

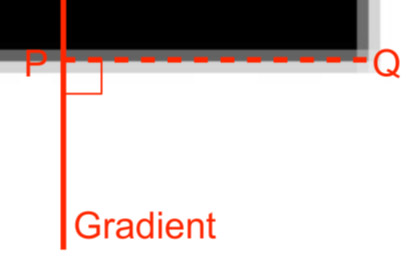

The algorithm is implemented in C++ and is compatible with multiple platforms. The input image is first converted to grayscale. The Harris corner detection algorithm then performs the pixel-level detection, and a gradient-based approach performs the subpixel-level refinement.

Fig.3 Flow chart of image grayscaling
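As a rough illustration of the grayscaling step, the sketch below converts an interleaved 8-bit RGB buffer to a single-channel float image. The function name `toGray`, the buffer layout and the BT.601 luma weights are assumptions made for this example; the project page does not state which weighting the team used.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: converts interleaved RGB (R, G, B, R, G, B, ...) to grayscale.
// The 0.299 / 0.587 / 0.114 weights are the common BT.601 luma weights,
// assumed here because the project does not specify its own.
std::vector<float> toGray(const std::vector<std::uint8_t>& rgb,
                          std::size_t width, std::size_t height) {
    assert(rgb.size() == 3 * width * height);
    std::vector<float> gray(width * height);
    for (std::size_t i = 0; i < width * height; ++i) {
        const float r = rgb[3 * i];
        const float g = rgb[3 * i + 1];
        const float b = rgb[3 * i + 2];
        gray[i] = 0.299f * r + 0.587f * g + 0.114f * b;
    }
    return gray;
}
```

Keeping the output as `float` avoids rounding before the gradient computations in the later steps.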

![Fig.4 λ1, λ2 response of Harris corner[2]](https://www.ji.sjtu.edu.cn/wp-content/uploads/2020/07/450-8-pic04.jpg)

Fig.4 λ1, λ2 response of Harris corner[2]
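The λ1, λ2 picture can be turned into a per-pixel score without computing eigenvalues explicitly, using the standard Harris response R = det(M) - k·trace(M)², where M is the structure tensor summed over a small window. The sketch below is a minimal version under assumptions that are not taken from the project: central-difference gradients, an unweighted 3×3 window, and k = 0.04. The name `harrisResponse` is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Harris response for a row-major grayscale image of size w x h.
// R > 0 suggests a corner (both eigenvalues large), R < 0 an edge,
// and R close to 0 a flat region. Pixels too close to the border keep R = 0.
std::vector<float> harrisResponse(const std::vector<float>& gray,
                                  int w, int h, float k = 0.04f) {
    assert(gray.size() == static_cast<std::size_t>(w) * static_cast<std::size_t>(h));
    std::vector<float> ix(gray.size(), 0.0f), iy(gray.size(), 0.0f);
    std::vector<float> response(gray.size(), 0.0f);

    // Central-difference gradients on interior pixels.
    for (int y = 1; y + 1 < h; ++y) {
        for (int x = 1; x + 1 < w; ++x) {
            ix[y * w + x] = 0.5f * (gray[y * w + x + 1] - gray[y * w + x - 1]);
            iy[y * w + x] = 0.5f * (gray[(y + 1) * w + x] - gray[(y - 1) * w + x]);
        }
    }

    // Structure tensor M = [[a, b], [b, c]] summed over a 3x3 window.
    for (int y = 2; y + 2 < h; ++y) {
        for (int x = 2; x + 2 < w; ++x) {
            float a = 0.0f, b = 0.0f, c = 0.0f;
            for (int dy = -1; dy <= 1; ++dy) {
                for (int dx = -1; dx <= 1; ++dx) {
                    const int idx = (y + dy) * w + (x + dx);
                    a += ix[idx] * ix[idx];
                    b += ix[idx] * iy[idx];
                    c += iy[idx] * iy[idx];
                }
            }
            const float det = a * c - b * b;
            const float trace = a + c;
            response[y * w + x] = det - k * trace * trace;
        }
    }
    return response;
}
```

Pixel-level corner candidates would then be the local maxima of the response above some threshold; both the threshold and the non-maximum suppression are left out of this sketch.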

Fig.5 Sample of image gradient
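The page does not say which gradient-based refinement the team used. One common formulation (the one behind OpenCV's `cornerSubPix`) is shown here as an assumption. It relies on the observation that, for the true corner q, the vector from q to any nearby pixel p_i either lies in a flat region, where the gradient g_i is zero, or runs along an edge, where g_i is perpendicular to it. Multiplying that orthogonality condition by g_i and summing over the window gives a small linear system for q:

```latex
% q   : refined (subpixel) corner position
% p_i : pixel positions in a window around the current estimate
% g_i : image gradient at p_i
g_i^{\top} (q - p_i) = 0 \quad \text{for every } p_i \text{ in the window}
\;\Longrightarrow\;
\Big( \sum_i g_i g_i^{\top} \Big)\, q = \sum_i g_i g_i^{\top} p_i
\;\Longrightarrow\;
q = \Big( \sum_i g_i g_i^{\top} \Big)^{-1} \sum_i g_i g_i^{\top} p_i
```

In practice the window is re-centred on the new q and the solve is repeated until q stops moving by more than a small tolerance.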

Modeling and Analysis

Thanks to the modular design, the three sub-functions can run independently and are easy to connect, because each one's output matches the next one's input.
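One way to picture this modularity is to give each stage a fixed signature so that any implementation with matching input and output can be swapped in. The types and names below (`Image`, `Corner`, `runPipeline`) are hypothetical and only illustrate the idea; they are not the project's actual interfaces.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Hypothetical data types shared by the three stages.
struct Image {
    int width;
    int height;
    std::vector<float> pixels;
};

struct Corner {
    double x;
    double y;
};

// Each stage is an independent callable with a fixed input and output type.
using Preprocess = std::function<Image(const Image&)>;
using PixelDetect = std::function<std::vector<Corner>(const Image&)>;
using SubpixelRefine =
    std::function<std::vector<Corner>(const Image&, const std::vector<Corner>&)>;

// Chains the stages: preprocessing, pixel-level detection, subpixel refinement.
std::vector<Corner> runPipeline(const Image& input,
                                const Preprocess& pre,
                                const PixelDetect& detect,
                                const SubpixelRefine& refine) {
    assert(pre && detect && refine);  // all three stages must be provided
    const Image prepared = pre(input);
    const std::vector<Corner> coarse = detect(prepared);
    return refine(prepared, coarse);
}
```

With this shape, each stage can be tested on its own by feeding it a fixed input, and a different detector or refinement can be tried without touching the other two stages.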

Validation

Validation Process:

To validate the design, a series of 5120 × 2880 pixel images at 350 dpi was taken under various light intensities, backgrounds and view angles. After the images were upsampled to 12800 × 7200 using bilinear interpolation, group members labeled the corner points manually, and the average of their labels was taken as the validation dataset. The input image is resized to 720p before our algorithm is run to produce a test result. We then calculate the accuracy and record the limits of the different conditions within which the algorithm performs well.
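The comparison between detections and labels can be sketched as follows. Since 720p is 1280 × 720 and the labels live on the 12800 × 7200 image, the two coordinate systems differ by a factor of 10. The helper names are hypothetical, and mapping by plain division is an assumption: a pixel-centre convention would add a half-pixel offset, and the page does not say which convention the team used.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Point2d {
    double x;
    double y;
};

// Averages the manual labels that several group members gave for one corner.
Point2d meanLabel(const std::vector<Point2d>& labels) {
    assert(!labels.empty());
    Point2d sum{0.0, 0.0};
    for (const Point2d& p : labels) {
        sum.x += p.x;
        sum.y += p.y;
    }
    const double n = static_cast<double>(labels.size());
    return {sum.x / n, sum.y / n};
}

// Maps a label from the 12800 x 7200 image to 1280 x 720 coordinates.
// Plain division by 10 is an assumption (no half-pixel offset applied).
Point2d labelTo720p(const Point2d& label) {
    return {label.x / 10.0, label.y / 10.0};
}

// Euclidean distance in pixels between a detected corner and the ground truth.
double errorPx(const Point2d& detected, const Point2d& truth) {
    return std::hypot(detected.x - truth.x, detected.y - truth.y);
}
```

A detection would count as meeting the accuracy specification when `errorPx` is at most 0.1 for the averaged, rescaled label.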

Fig.6 Uneven background and 60 degree view angle

Validation Results:

According to the validation, most specifications are met.

√ Detection accuracy ≤ 0.1 pixels

√ Programming language: C++

√ Accuracy maintained across different view angles and backgrounds

√ Multi-platform compatible

Conclusion

Although the algorithm functions properly, some interference can still affect the result. Other approaches, such as increasing the contrast ratio and extracting the pattern, could therefore be considered so that a more precise result can eventually be delivered.

Acknowledgement

Sponsor: Huajun Guan, Jianning Yu from HASCO Visions

Prof. Jigang Wu from UMJI-SJTU

Tao Lu, Xiang Hao, Yibo Chen, Zhikang Li, Allen Zhu from UMJI-SJTU

References

[1] https://ieeexplore.ieee.org/abstract/document/6908613

[2] https://ai.stanford.edu/~syyeung/cvweb/tutorial2.html