VE/VM450

The ViWi Vision-Aided Millimeter Wave Beam Tracking

Team: Tracker

Group 17

Instructor & Sponsor: Prof. Chong Han

Shukai Fan, Yujian Liu, Xinhao Liao, Hantao Zhao, Yumin Zhang

Team Members

Shukai Fan

Yujian Liu

Xinhao Liao

Hantao Zhao

Yumin Zhang

Instructor:

Prof. Chong Han

Project Description

Problem Statement

In 5G communication, millimeter wave (mmWave) is widely used because of its high bandwidth. However, mmWave beams are narrow, so each beam covers only a small range of angles and is difficult to track accurately. It is therefore crucial that the base station correctly predict the Angle of Arrival (AoA) from the history of beam directions and from vision-aided devices such as cameras. In this project, instead of conventional methods, we applied deep learning methods to the ViWi dataset, a publicly available dataset of simulated environments.

Concept Generation

Our beam prediction models draw on numerous concepts in data preprocessing, sequence handling, and image feature extraction. The core concepts are explained here.

• Gated Recurrent Unit [1]

The GRU uses gating units to control what is kept in memory, which makes it flexible and able to handle long-term dependencies. It inherits the advantages of the LSTM and simplifies its structure by combining the forget gate and the input gate into a single update gate.

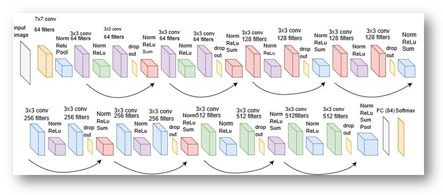
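The gating described above can be made concrete with a single GRU step in plain Python. This is an illustrative sketch, not the team's implementation: it uses the formulation h_t = (1 - z_t) * h_{t-1} + z_t * h̃_t, with a reset gate r_t applied to h_{t-1} inside the candidate state. PyTorch's `torch.nn.GRU` documents the mirrored form, in which z_t and 1 - z_t swap roles. The helper names `gru_cell`, `gru_sequence`, and `init_params` are hypothetical.

```python
import math
import random
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]
Params = Dict[str, list]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product for nested lists."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def init_params(input_size: int, hidden_size: int, seed: int = 0, scale: float = 0.1) -> Params:
    """Hypothetical small random initialisation for the gate blocks z, r, h."""
    rng = random.Random(seed)

    def mat(rows: int, cols: int) -> Matrix:
        return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

    params: Params = {}
    for g in ("z", "r", "h"):
        params["W" + g] = mat(hidden_size, input_size)   # input-to-hidden weights
        params["U" + g] = mat(hidden_size, hidden_size)  # hidden-to-hidden weights
        params["b" + g] = [0.0] * hidden_size            # biases
    return params


def gru_cell(x: Vector, h: Vector, p: Params) -> Vector:
    """One GRU step:
    z = sigmoid(Wz x + Uz h + bz)            update gate
    r = sigmoid(Wr x + Ur h + br)            reset gate
    h_tilde = tanh(Wh x + Uh (r * h) + bh)   candidate state
    h_new = (1 - z) * h + z * h_tilde
    """
    def affine(W: Matrix, U: Matrix, b: Vector, state: Vector) -> Vector:
        return [a + c + d for a, c, d in zip(matvec(W, x), matvec(U, state), b)]

    z = [sigmoid(v) for v in affine(p["Wz"], p["Uz"], p["bz"], h)]
    r = [sigmoid(v) for v in affine(p["Wr"], p["Ur"], p["br"], h)]
    reset_h = [ri * hi for ri, hi in zip(r, h)]  # reset gate scales the old state
    h_tilde = [math.tanh(v) for v in affine(p["Wh"], p["Uh"], p["bh"], reset_h)]
    return [(1.0 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, h_tilde)]


def gru_sequence(xs: List[Vector], h0: Vector, p: Params) -> List[Vector]:
    """Run the cell over a sequence and return every hidden state."""
    states, h = [], h0
    for x in xs:
        h = gru_cell(x, h, p)
        states.append(h)
    return states


if __name__ == "__main__":
    params = init_params(input_size=3, hidden_size=4)
    seq = [[0.1, 0.2, 0.3], [0.0, -0.5, 1.0], [0.7, 0.7, 0.7]]
    outputs = gru_sequence(seq, [0.0] * 4, params)
    print(len(outputs), outputs[-1])
```

Because each new state is a gate-weighted mix of the previous state and a tanh output, the hidden state stays inside (-1, 1) whenever it starts there.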

• ResNet-18 [2]

ResNet-18 is an 18-layer convolutional neural network. It introduces residual (shortcut) connections, which allow networks to benefit from deeper structures. Pretrained ResNet-18 models are widely available and can be adapted to downstream tasks, so they require limited memory and short training time.

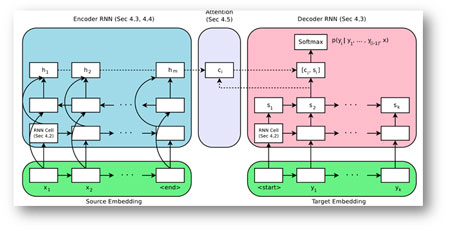
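The residual idea can be shown with a toy block in plain Python. This sketches the identity shortcut y = relu(F(x) + x) from He et al. [2], not ResNet-18 itself: the helper names are hypothetical, and dense layers stand in for the two 3x3 convolutions with batch normalization that the real network uses.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def linear(m: Matrix, v: Vector) -> Vector:
    """Dense layer without bias: returns m @ v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def relu(v: Vector) -> Vector:
    return [max(0.0, a) for a in v]


def residual_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """y = relu(F(x) + x) with F(x) = W2 relu(W1 x).

    The identity shortcut adds x back after F, so the layers only have to
    learn the residual F(x) = H(x) - x. In ResNet-18, F is two 3x3
    convolutions with batch normalisation; dense layers keep this short.
    """
    fx = linear(w2, relu(linear(w1, x)))
    return relu([f + xi for f, xi in zip(fx, x)])


if __name__ == "__main__":
    zero = [[0.0] * 3 for _ in range(3)]
    # With F's weights at zero, the shortcut alone carries the signal: relu(x).
    print(residual_block([1.0, -2.0, 0.5], zero, zero))  # [1.0, 0.0, 0.5]
```

The zero-weight case illustrates why depth is cheap to add: a block whose layers have learned nothing still passes its input through instead of destroying it.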

• Attention mechanism [3]

The attention mechanism was developed for sequence-to-sequence (seq2seq) models. In the original seq2seq model, the encoder compresses the whole input sequence into a single vector, which causes significant loss of information. The attention mechanism lets the decoder look back at the entire sequence of encoder outputs, which is expected to help the model make better predictions.

Fig. 1 ResNet-18

Fig. 2 Attention-based seq2seq
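A minimal sketch of how a decoder can look back at all encoder outputs, using the "dot" score from Luong et al. [3]. Which of Luong's score functions (dot, general, or concat) the project used is not stated, so the choice here is an assumption, and `dot_attention` is a hypothetical helper.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def softmax(scores: Sequence[float]) -> Vector:
    """Softmax with max-subtraction for numerical stability."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot_attention(decoder_state: Sequence[float],
                  encoder_outputs: Sequence[Sequence[float]]) -> Tuple[Vector, Vector]:
    """Luong-style dot attention.

    score_i = decoder_state . encoder_outputs[i]
    alpha   = softmax(score)
    context = sum_i alpha_i * encoder_outputs[i]
    Returns (context, alpha).
    """
    scores = [sum(a * b for a, b in zip(decoder_state, enc)) for enc in encoder_outputs]
    alpha = softmax(scores)
    dim = len(encoder_outputs[0])
    context = [sum(w * enc[j] for w, enc in zip(alpha, encoder_outputs)) for j in range(dim)]
    return context, alpha


if __name__ == "__main__":
    encoder_outputs = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
    context, alpha = dot_attention([2.0, 0.0], encoder_outputs)
    print(alpha)    # the first step, most aligned with the query, gets the largest weight
    print(context)
```

Unlike a single fixed encoder vector, the context is recomputed for each decoder state, so different decoding steps can focus on different parts of the input history.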

Design Description

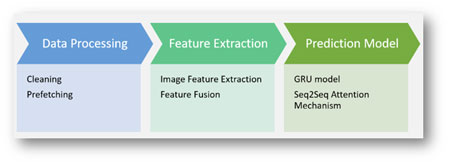

• Data Processing

The dataset needs to be preprocessed: we resolve a data leakage problem in the dataset and prefetch images to accelerate the loading process.
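How the images were prefetched is not described. One common approach, sketched here as an assumption, is to keep a few image loads running in background threads while earlier images are being consumed. `prefetch` is a hypothetical helper, and `load` stands in for whatever image decoder the pipeline actually uses.

```python
from collections import deque
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, Deque, Iterable, Iterator, TypeVar

T = TypeVar("T")


def prefetch(paths: Iterable[str], load: Callable[[str], T],
             depth: int = 4, workers: int = 2) -> Iterator[T]:
    """Yield load(path) for every path, in order, while keeping up to
    `depth` loads queued in a thread pool."""
    if depth < 1:
        raise ValueError("depth must be at least 1")
    with ThreadPoolExecutor(max_workers=workers) as pool:
        pending: Deque[Future] = deque()
        for path in paths:
            pending.append(pool.submit(load, path))  # start loading ahead of use
            if len(pending) >= depth:
                yield pending.popleft().result()     # hand back the oldest image
        while pending:                               # drain the remaining loads
            yield pending.popleft().result()


if __name__ == "__main__":
    from pathlib import Path

    def fake_load(path: str) -> bytes:
        # Hypothetical stand-in for a real image decoder.
        return Path(path).name.encode()

    for data in prefetch([f"frames/img_{i}.jpg" for i in range(5)], fake_load, depth=2):
        print(data)
```

Threads suit this case because file reads spend most of their time waiting on I/O, and results come back in the original order, so sample order is unchanged.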

• Feature Extraction

Images contain information such as the positions of vehicles and potential reflective surfaces. Applying ResNet-18 to the images extracts these features, which are then fused together into sequences.
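How the image features and the beam history are fused is not specified. The sketch below assumes the simplest option: at each time step, concatenate the image feature vector (for example, the 512-dimensional pooled output of ResNet-18) with a one-hot encoding of that step's beam index. `fuse_sequence`, `one_hot`, and the beam count are hypothetical.

```python
from typing import List, Sequence


def one_hot(index: int, size: int) -> List[float]:
    """Encode a beam index as a one-hot vector of length `size`."""
    if not 0 <= index < size:
        raise ValueError(f"beam index {index} out of range [0, {size})")
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


def fuse_sequence(image_features: Sequence[Sequence[float]],
                  beam_indices: Sequence[int], num_beams: int) -> List[List[float]]:
    """Concatenate, per time step, the image feature vector with the one-hot beam index."""
    if len(image_features) != len(beam_indices):
        raise ValueError("need one image feature vector per beam index")
    return [list(feat) + one_hot(beam, num_beams)
            for feat, beam in zip(image_features, beam_indices)]


if __name__ == "__main__":
    # Two time steps with toy 2-D "image features" and a hypothetical 4-beam codebook.
    seq = fuse_sequence([[0.5, 0.25], [1.0, 0.0]], [2, 0], num_beams=4)
    print(seq)  # [[0.5, 0.25, 0.0, 0.0, 1.0, 0.0], [1.0, 0.0, 1.0, 0.0, 0.0, 0.0]]
```

With ResNet-18 pooled features, each fused vector would have 512 + num_beams entries, and the resulting list is one input sequence for the recurrent model.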

• Prediction model

A GRU model is applied to the input sequences. An attention mechanism then assigns weights to the elements of the sequence. Finally, the angle with the highest probability is selected as the prediction.

Fig. 3 Logic Flow
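The final step of the prediction model, selecting the angle with the highest probability, amounts to a softmax over per-candidate scores followed by an argmax. This minimal sketch assumes a linear output layer on top of the attention-weighted features, with one row of weights per candidate angle (beam); `predict_beam` and its weights are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Softmax with max-subtraction for numerical stability."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_beam(features: Sequence[float], weights: Sequence[Sequence[float]],
                 bias: Sequence[float]) -> Tuple[int, List[float]]:
    """Score each candidate beam with a linear layer, turn the scores into
    probabilities, and return (index of the most probable beam, probabilities)."""
    if len(weights) != len(bias):
        raise ValueError("need one bias per candidate beam")
    logits = [sum(w * f for w, f in zip(row, features)) + b for row, b in zip(weights, bias)]
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)  # argmax
    return best, probs


if __name__ == "__main__":
    eye = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
    best, probs = predict_beam([0.1, 2.0, -1.0], eye, [0.0, 0.0, 0.0])
    print(best, probs)
```

Keeping the full probability vector, not just the argmax, also makes it easy to compute top-k style accuracies.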

Validation

Validation Process:

The ViWi score is computed with the ViWi-Score formula.

For offline training time, we take the average over multiple training runs.
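Averaging wall-clock time over repeated runs needs only the standard library. In this sketch, `average_runtime` is a hypothetical helper and `train_fn` stands in for one full offline training run.

```python
import statistics
import time
from typing import Callable, List, Tuple


def average_runtime(train_fn: Callable[[], object], runs: int = 3) -> Tuple[float, float, List[float]]:
    """Run `train_fn` `runs` times; return (mean seconds, sample std dev, all durations)."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    durations: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()  # monotonic, high-resolution clock
        train_fn()
        durations.append(time.perf_counter() - start)
    spread = statistics.stdev(durations) if runs > 1 else 0.0
    return statistics.mean(durations), spread, durations


if __name__ == "__main__":
    mean_s, std_s, _ = average_runtime(lambda: sum(i * i for i in range(200_000)), runs=3)
    print(f"mean {mean_s:.4f} s, std {std_s:.4f} s")
```

Reporting the standard deviation next to the mean shows how much the training time varies from run to run.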

For robustness, we manually add noise to the dataset and check the fluctuation in performance.
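Which signals received noise, and how, is not stated. A common way to inject noise at a chosen SNR, sketched here as an assumption, is additive white Gaussian noise whose power is derived from the signal power: P_noise = P_signal / 10^(SNR_dB / 10). `add_awgn` and `measured_snr_db` are hypothetical helpers that work on real-valued samples.

```python
import math
import random
from typing import List, Optional, Sequence


def add_awgn(signal: Sequence[float], snr_db: float,
             rng: Optional[random.Random] = None) -> List[float]:
    """Return signal + Gaussian noise scaled so that P_signal / P_noise = 10^(snr_db / 10)."""
    if not signal:
        raise ValueError("signal must be non-empty")
    if rng is None:
        rng = random.Random()
    signal_power = sum(s * s for s in signal) / len(signal)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    sigma = math.sqrt(noise_power)  # standard deviation of each noise sample
    return [s + rng.gauss(0.0, sigma) for s in signal]


def measured_snr_db(clean: Sequence[float], noisy: Sequence[float]) -> float:
    """Empirical SNR in dB, useful for checking the injected noise level."""
    signal_power = sum(c * c for c in clean) / len(clean)
    noise_power = sum((n - c) ** 2 for c, n in zip(clean, noisy)) / len(clean)
    return 10.0 * math.log10(signal_power / noise_power)


if __name__ == "__main__":
    rng = random.Random(0)
    clean = [math.sin(0.01 * i) for i in range(10_000)]
    for snr in (40.0, 30.0, 20.0):
        noisy = add_awgn(clean, snr, rng)
        print(snr, round(measured_snr_db(clean, noisy), 2))
```

Sweeping `snr_db` downward and re-evaluating the model at each level is one way to measure the performance fluctuation described above.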

Other specifications can also be determined using similar methods.

Validation Results:

The validation results show that the following specifications are met:

√ Top-1 Level-1 Accuracy: 86% >= 80%

√ Competition Score: 0.6 >= 0.35

√ Offline Training Time: 10 h <= 12 h

√ Online Prediction Time: 7 ms <= 10 ms

√ Disk Storage: 15 GB <= 25 GB

√ Robustness to Low SNR: 30 dB <= 30 dB

√ indicates that the specification has been verified.

Conclusion

In this project, we developed a beam tracking system based on ResNet-18, a GRU, and an attention-based seq2seq model. The system achieves 86% Top-1 Level-1 accuracy and a score of 0.667, which ranks first in the ViWi competition [4]. It is also robust to environmental interference, and its memory consumption is reasonable. The model shows strong AoA prediction performance, and we hope it can contribute to 5G development.

Acknowledgement

Instructor & Sponsor:

Prof. Chong Han, UM-SJTU Joint Institute

Reference

[1] Dey R, et al. Gate-variants of gated recurrent unit (GRU) neural networks. In Proceedings of 2017 IEEE MWSCAS. IEEE, 2017: 1597-1600.

[2] He K, et al. Deep residual learning for image recognition. In Proceedings of 2016 IEEE CVPR. IEEE, 2016: 770-778.

[3] Luong M T, et al. Effective approaches to attention-based neural machine translation. In Proceedings of 2015 EMNLP. ACL, 2015: 1412-1421.

[4] ViWi challenge ranking. https://www.viwi-dataset.net/challenge_ranking.html